At its core, A/B testing a landing page is simple: you pit two versions of a webpage against each other to see which one performs better. You show version A (the control) to one group of visitors and version B (the variation) to another. Then, you analyze the data to see which headline, call-to-action, or design actually convinces more people to convert.

Ready to see how it works? Let's dive in.

Why A/B Testing Is Your Key to Higher Conversions

Are you still guessing what your audience wants? It's time to stop. A/B testing empowers you to make decisions based on what your audience does, not what you think they'll do. It transforms your landing page from a static brochure into a dynamic, high-performance conversion machine.

Even small, data-backed tweaks can lead to huge lifts in leads, sales, and sign-ups. If you're a SaaS company trying to get more free trial sign-ups, a simple headline change could be worth thousands in recurring revenue. This is why smart marketers see testing as a core part of their growth strategy.

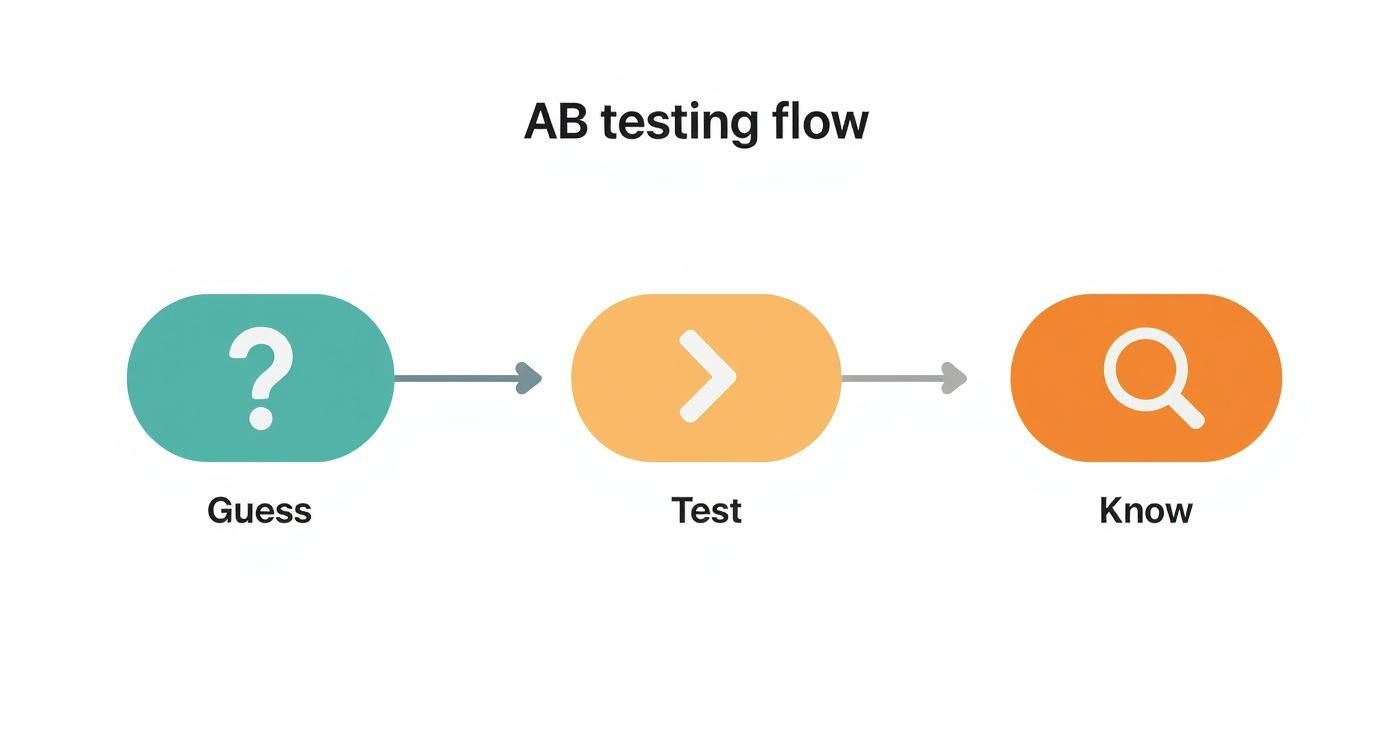

From Assumptions to Certainty

Every marketer has an opinion on what works. But opinions don't pay the bills—data does. A/B testing cuts through the noise and boardroom debates, replacing guesswork with cold, hard evidence.

Instead of arguing over whether a blue or green button will perform better, you can just test it and know. This cycle of testing, learning, and optimizing creates a powerful feedback loop that consistently nudges your conversion rates in the right direction. It's a critical piece of the broader conversion rate optimization strategies that fuel business growth.

Unlock a Competitive Edge

Chances are, your competitors are still operating on assumptions. By implementing a structured testing process, you gain a real advantage. You’ll understand your customers on a much deeper level: their preferences, pain points, and the exact words that make them click.

The proof is in the numbers. Research from VWO shows 77% of businesses use A/B testing on their websites, and 60% are doing it on their landing pages specifically. But here’s the kicker: the same data reveals that only about 1 in 8 tests produces a statistically significant winner. That underscores how important it is to be strategic with your tests.

When done right, A/B testing delivers a direct return by:

- Increasing Conversion Rates: The most obvious win. You get more people to take the action you want them to take.

- Lowering Customer Acquisition Costs: By converting more of the traffic you already have, you effectively lower the cost to acquire each new customer.

- Improving User Experience: Testing helps you pinpoint and remove friction points on your landing page that frustrate users and kill conversions.

When you commit to a culture of testing, you’re committing to a culture of continuous improvement. It’s a smarter, more effective way to market.

How to Build Your First Landing Page Test

Launching your first A/B test can feel intimidating, but breaking it down makes the process manageable. The entire exercise is about swapping your assumptions for data-backed decisions. Instead of throwing random changes at a page, a structured approach ensures you learn something valuable from every experiment—win or lose.

The A/B testing journey breaks down into three core phases: forming an educated guess, running a controlled test, and then digging into the results.

Ultimately, testing isn't just about finding a "winner." It's about building a repeatable system for truly understanding your audience.

Start With a Strong Hypothesis

Every successful A/B test starts with a solid hypothesis. This isn't a wild guess; it’s an educated statement rooted in data, user feedback, or observed behavior. A great hypothesis follows a simple formula: "If I change [X], then [Y] will happen because [Z]."

For instance, a weak hypothesis is: "Let's test a new button color." It's lazy and directionless.

A strong hypothesis sounds like this: "Changing our CTA button color from grey to high-contrast orange will increase form submissions because it will grab more user attention and create a clearer visual path."

See the difference? This gives your test a clear purpose and a measurable outcome. Dive into your analytics. Where are people dropping off? Are they clicking on things that aren't links? This user behavior data is a goldmine for test ideas.

Your hypothesis is your roadmap. Without it, you're not testing; you're just making changes and hoping for the best.

Choose the Right Tools for the Job

Once you have a hypothesis, you need the right tech to run the test. The market is filled with options for different needs and budgets. The good news? You don't need the most expensive platform to get started.

Here’s a quick rundown of your options:

- Integrated Platform Tools: Many marketing platforms like HubSpot or Unbounce have A/B testing features baked in. If you're already using one, this is often the easiest route.

- Dedicated Testing Platforms: Tools like VWO, Optimizely, or Convert Experiences are the heavy hitters. They offer powerful features for more complex experiments but come with a steeper learning curve.

- Free and Entry-Level Tools: While Google Optimize has been sunsetted, some platforms offer free tiers or limited plans that are perfect for your first few tests.

The best tool for you boils down to your technical comfort, budget, and test complexity. For a simple headline or CTA test, an integrated tool is usually more than enough.

Create and Validate Your Variation

Now for the fun part: building your "B" version—the challenger to your current page. This is where you bring your hypothesis to life.

Whether you’re swapping an image, rewriting a headline, or redesigning a form, stick to changing one key element at a time. If you change the headline, CTA, and image all at once, you’ll have no idea which change actually moved the needle.

Attention to detail matters here. Following landing page design best practices can help ensure your variation is built on a solid foundation.

Before you launch, you must validate your new page version. A broken variation can tank your test and leave you with useless data. Make sure everything displays correctly across different browsers and devices. You can use a simple tool to test your HTML and catch glaring errors before they impact your traffic.

Configure Your Test Settings

This is the final checkpoint. You need to dial in the test parameters in your chosen tool. Don't rush this step.

Here are the key settings to get right:

- Traffic Allocation: For a standard A/B test, you'll want a 50/50 split. Half your audience sees the original page (version A) and the other half sees your new variation (version B).

- Conversion Goals: What action counts as a "win"? This could be a form submission, a button click, or a purchase. Define this goal clearly in your software so it knows how to track performance.

- Audience Targeting: Who should see your test? Is it for all visitors, new visitors only, or just traffic from a specific ad campaign? Be specific to ensure your results are relevant.
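
Curious what a 50/50 split looks like under the hood? Here's a minimal Python sketch, assuming each visitor has a stable ID such as a cookie value. The function name, the experiment label, and the hashing approach are illustrative assumptions, not how any particular platform does it.

```python
import hashlib

def assign_variant(visitor_id: str, experiment: str = "cta-color-test") -> str:
    """Return 'A' or 'B' for a visitor, splitting traffic roughly 50/50."""
    # Hashing the experiment name with the visitor ID keeps the assignment
    # stable: the same visitor always lands on the same version.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 100  # integer in 0..99
    return "A" if bucket < 50 else "B"

# Hypothetical visitor IDs, just to show that the split evens out.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}")] += 1
print(counts)
```

The point of the stable assignment is consistency: a returning visitor who saw version B yesterday should see version B again today, or your data gets muddy.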

With your hypothesis formed, variation built, and settings configured, you're ready to hit "launch." Now comes the hardest part: waiting patiently for the data to roll in.

Identifying What to Test for Maximum Impact

So, you're ready to run a test. But where do you start? Not all A/B tests are created equal. You could spend months tweaking button colors with little to show for it, or you could test one headline and double your sign-ups.

The key is to focus your energy on the elements with the highest potential to influence a user's decision. A strategic approach to A/B testing for landing pages ensures your efforts actually move the needle.

Headlines and Value Propositions

Your headline is the first thing most visitors read. You have about three seconds to convince them to stick around. This is your prime real estate.

Don't just test small wording changes—go big. Test completely different angles. For example, does a question-based headline like "Struggling to Generate Leads?" outperform a benefit-driven one like "The Easiest Way to Generate 50% More Leads"? What story does your headline tell?

Pro Tip: Your headline's job isn't just to describe your product. It's to articulate the visitor's problem and promise a clear, immediate solution.

Call-to-Action Text and Design

The call-to-action (CTA) is the finish line. It's the one button you need people to click. Because this is where the final conversion decision happens, even small changes can have a massive impact.

Get specific with your CTA copy. "Submit" is generic and uninspiring. Try testing something that communicates value, like "Get My Free Ebook" or "Start My 14-Day Trial." The language should reflect what the user is getting, not what they are giving.

Beyond the text, consider the design:

- Color and Contrast: Does your button stand out or blend in? High contrast is almost always better.

- Size and Shape: A tiny button is easy to miss, especially on mobile. Test a larger, more prominent button.

- Placement: Is your CTA clearly visible "above the fold," or do users have to hunt for it?

Hero Images and Videos

The main visual on your landing page sets the emotional tone. A generic stock photo of people in a boardroom tells visitors nothing. A powerful visual should either show your product in action or help the user envision a better future with your product.

Try testing a static image against a short, compelling video. The impact can be huge. While median conversion rates for landing pages hover around 2.35%, the top performers often exceed 11.45%. Elements like video play a major role in this, as they can improve message retention by up to 95%.

Social Proof and Trust Signals

People are naturally skeptical online. Social proof is your secret weapon for easing their anxiety and building credibility. But what kind of social proof works best for your audience?

That’s a perfect question for an A/B test. You could pit different forms of proof against each other to see what resonates.

- Testimonial vs. Case Study: Does a short, punchy quote from a happy customer outperform a link to a detailed case study?

- Client Logos vs. Star Ratings: Is a wall of recognizable client logos more persuasive than an average star rating?

- "As Seen On" vs. Awards: Does mentioning features in major publications build more trust than showcasing industry awards?

Each test helps you understand what makes your audience feel safe and confident enough to convert.

Page Layout and Form Length

Sometimes, the biggest wins come from testing the overall structure of your page. In a famous test, Highrise found that a longer, more detailed landing page increased their conversions by 37.5%. This defies the old marketing wisdom that "shorter is always better."

The ideal length depends on your product's complexity and price point. For a simple email sign-up, a short form is best. But for a high-ticket B2B service, a longer page that answers every possible objection might be the clear winner.

And don't forget to question your form length. Does asking for just an email address get more sign-ups than asking for a name, email, and phone number? You might get more leads with a shorter form, but they may be lower quality. Testing helps you find that perfect balance. While A/B testing focuses on one variable, you can learn more about testing multiple elements in our guide to what is multivariate testing.

Prioritizing Your A/B Test Ideas

To decide where to start, map out your ideas based on their potential impact versus the effort required.

Weighing impact against effort is a great way to think strategically. Aim for high-impact, low-effort tests first to get quick wins and build momentum.
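
One way to make the impact-versus-effort comparison concrete is a simple score. The sketch below is a rough illustration in Python: the 1-to-5 scales, the `Idea` class, and the example scores are all assumptions you'd replace with your own estimates.

```python
from dataclasses import dataclass

@dataclass
class Idea:
    name: str
    impact: int  # 1 (minor) to 5 (major), your own estimate
    effort: int  # 1 (minutes) to 5 (weeks), your own estimate

def prioritize(ideas):
    """Sort ideas so high-impact, low-effort tests come first."""
    return sorted(ideas, key=lambda idea: idea.impact / idea.effort, reverse=True)

# Hypothetical backlog with made-up scores.
backlog = [
    Idea("Full page redesign", impact=5, effort=4),
    Idea("Rewrite headline", impact=5, effort=1),
    Idea("Swap hero image for video", impact=3, effort=4),
    Idea("Value-focused CTA copy", impact=4, effort=1),
]
for idea in prioritize(backlog):
    print(f"{idea.name}: {idea.impact / idea.effort:.2f}")
```

With these example scores, the headline rewrite and the CTA copy float to the top, which matches the quick-wins-first advice.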

How to Analyze Your Test Results Accurately

So, you've launched your A/B test. Now comes the moment of truth: digging into the data to find out what your audience actually prefers. The real gold is buried in the results, but it's easy to get lost. Let's talk about how to read the story your data is telling you.

The goal is to look past surface-level vanity metrics. A solid landing page testing strategy focuses on numbers that directly impact the business—like form submission rates or the click-through rate on your main CTA. Tracking these across both versions gives you a clear, side-by-side comparison. For a deeper dive, check out this great resource on landing page testing metrics from Knak.com.

Understanding Statistical Significance

Have you ever seen one version pull ahead after the first day and felt the urge to declare it the winner? Not so fast. The single most important concept here is statistical significance.

In plain English, this is a mathematical way to measure how confident you can be that your results aren't a fluke. Most testing tools show this as a "confidence level," typically as a percentage. The industry standard is to aim for a 95% confidence level or higher before making any decisions.

Reaching 95% confidence means that if the two versions truly performed the same, a gap this large would show up only about 5% of the time through random luck. Act on much less, and you risk mistaking noise for a win.

Patience is your best friend. Don't end a test just because one variant is "winning" after 48 hours. Let it run long enough to gather a meaningful sample size.
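
If you want to sanity-check the number your tool reports, here's a minimal sketch of one common approach, a two-proportion z-test, in plain Python. The visitor and conversion counts are made up, and your platform may well use a different statistical method, so treat this as an illustration rather than a replica of any tool's math.

```python
import math

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate: the conversion rate if A and B were really the same page.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0, 1.0  # no conversions (or all conversions): nothing to compare
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical results: A converts 200 of 5,000 visitors, B converts 250 of 5,000.
z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 95%: {p < 0.05}")
```

A p-value below 0.05 corresponds to the 95% confidence threshold discussed above.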

Key Metrics That Matter Most

When you open your results dashboard, your job is to zero in on the numbers that relate to your original hypothesis. What should you look for?

- Conversion Rate: This is your North Star. It's the percentage of visitors who completed your desired action. This is almost always the ultimate tie-breaker.

- Bounce Rate: Is your variation's bounce rate soaring? That's a huge red flag. It could mean the new design is confusing or turning people away.

- Time on Page: If the winning version also has a longer time on page, it's a good sign that your new content is more engaging.

Many modern testing platforms, which we cover in our guide to landing page optimization tools, will lay this data out clearly. The trick is to see how these secondary metrics support your main conversion goal.
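
For reference, the arithmetic behind the headline numbers is simple. Here's a small Python sketch with made-up traffic figures; the function names are just for illustration.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who completed the desired action."""
    return conversions / visitors if visitors else 0.0

def relative_lift(control_rate: float, variation_rate: float) -> float:
    """How much better (or worse) the variation did, relative to the control."""
    if control_rate == 0:
        raise ValueError("Control rate is zero, so relative lift is undefined.")
    return (variation_rate - control_rate) / control_rate

# Hypothetical numbers: 200 of 5,000 visitors convert on A, 250 of 5,000 on B.
rate_a = conversion_rate(200, 5000)
rate_b = conversion_rate(250, 5000)
print(f"A: {rate_a:.1%}, B: {rate_b:.1%}, lift: {relative_lift(rate_a, rate_b):+.1%}")
```

Notice the difference between absolute and relative change: going from 4% to 5% is a one-point gain, but a 25% relative lift. Make sure you know which one your dashboard is showing.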

When to Call a Winner (And When Not To)

You can confidently declare a winner when two things have happened: your test has reached statistical significance (95% or more), and you've run it for at least one full business cycle (usually one to two weeks).

But what if the results are flat? An inconclusive test isn't a failure—it's a lesson. It tells you that the element you changed didn't move the needle for your audience. That's incredibly valuable information! It stops you from wasting time on tiny tweaks and pushes you to test a bolder hypothesis next time.
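
The decision rule above can be sketched as a tiny Python function. The thresholds come from this guide (95% confidence, at least a week); the `planned_sample_reached` flag and the status labels are assumptions added for illustration.

```python
def experiment_status(p_value: float, days_run: int, planned_sample_reached: bool,
                      alpha: float = 0.05, min_days: int = 7) -> str:
    """Decide whether a test can be called, based on significance and duration."""
    if days_run < min_days:
        # Haven't covered a full business cycle yet, however good it looks.
        return "keep running"
    if p_value < alpha:
        return "winner"
    if planned_sample_reached:
        # Enough traffic, no clear difference: log the lesson and test bolder.
        return "inconclusive"
    return "keep running"

print(experiment_status(p_value=0.01, days_run=2, planned_sample_reached=False))
print(experiment_status(p_value=0.01, days_run=14, planned_sample_reached=True))
print(experiment_status(p_value=0.40, days_run=14, planned_sample_reached=True))
```

Note how the first case keeps running even with a strong early result. That's the "don't call it after 48 hours" rule in code form.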

Document Everything You Learn

This is the most overlooked—and most valuable—step in the process. Every test you run, whether it's a huge win, a total flop, or a draw, adds to your library of customer insights.

Create a simple spreadsheet to log every experiment. You don't need anything fancy, just track these key things:

- Hypothesis: What did you think would happen and why?

- Screenshots: A simple before-and-after of the control (A) and the variation (B).

- Results: The final conversion rates, confidence levels, and other key data.

- Learnings: What did this teach you? Boil it down to a single sentence takeaway.

Over time, this knowledge base becomes your secret weapon. It stops you from repeating failed tests and helps you build a real, sustainable culture of optimization.
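
If a spreadsheet feels too manual, the same log can live in a CSV file. Here's a minimal Python sketch using the four fields listed above; the column names, file paths, and the example entry are all hypothetical.

```python
import csv
import io

FIELDS = ["hypothesis", "screenshots", "results", "learnings"]

def write_log(entries, stream):
    """Write experiment entries as CSV rows, one row per test."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(entries)

# A made-up entry, purely to show the shape of the log.
entries = [{
    "hypothesis": "Orange CTA will lift form submissions by drawing more attention",
    "screenshots": "cta-test/control.png; cta-test/variation.png",
    "results": "A 4.0% vs B 5.0%, 95%+ confidence",
    "learnings": "High-contrast CTA helps on this page",
}]
buffer = io.StringIO()
write_log(entries, buffer)
print(buffer.getvalue())
```

The example writes to an in-memory buffer so it runs anywhere. To keep a real log, you'd pass an opened file instead, for example `open("experiments.csv", "w", newline="")`.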

Common A/B Testing Mistakes to Avoid

Even the sharpest strategy can fall apart due to simple errors. I've seen seasoned marketers fall into these common traps time and again. Knowing these pitfalls before you start is the best way to get reliable data from your A/B testing for landing pages.

Think of this as your pre-flight checklist. Running through these points before you launch can save you from wasting valuable traffic on a flawed test.

Calling the Test Too Early

This is, without a doubt, the most common mistake. You launch a test, and after 24 hours, version B is crushing it. The temptation to declare victory is huge.

Don't do it.

A single day's data is almost never enough. User behavior fluctuates wildly throughout the week. The people visiting your site on a Monday morning are likely in a different headspace than those browsing on a Friday night.

For instance, a B2B software page probably gets most of its qualified traffic during weekday business hours. If you call the test on a Tuesday, you've ignored how your weekend audience behaves. Run your test long enough to capture a full business cycle—for most companies, that means at least one full week, if not two.

Testing Too Many Variables at Once

Another classic blunder is turning an A/B test into a complete redesign. You get excited and decide to change the headline, hero image, CTA button, and customer testimonials all at once.

When your new version wins, you're ecstatic—but you've learned nothing. Was it the new headline? Or that one compelling testimonial? You'll never know.

The goal of A/B testing isn't just to find a winner; it's to understand why it won. Isolating a single variable is the only way to build a real, repeatable understanding of your audience.

Stick to one major change per test. If you have many ideas, that's what multivariate testing is for. But for a standard A/B test, simplicity is your friend.

Forgetting About External Factors

Your landing page doesn't exist in a vacuum. The outside world can have a massive impact on your traffic and conversion rates, and if you don't account for it, your results can be completely skewed.

Think about these scenarios:

- Seasonality: Are you testing a travel landing page during a holiday rush? Those results will be inflated.

- Marketing Campaigns: Did your email team launch a huge blast or a new ad campaign mid-test? A sudden flood of traffic will contaminate your data.

- PR and News Cycles: Did your company get a shoutout in a major publication? That wave of referral traffic will behave differently.

Before you start any test, check the marketing calendar. Find a "clean" window to run your experiment where external factors won't muddy the waters.

Ignoring Statistical Significance

Just because one version has more conversions doesn't automatically make it the winner. The difference could easily be random chance. This is where statistical significance comes in.

It’s the mathematical checkpoint that tells you if your results are legit or a fluke. Most testing tools, like VWO or Optimizely, will tell you when you've reached a confidence level of 95% or higher. This is the industry standard for a reason.

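Acting on a result at only 80% confidence means accepting roughly a 1 in 5 chance of a false alarm: a "win" that random noise alone could produce even if the two versions performed identically. Don't let impatience lead to a bad business decision. Wait for the math to back you up.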

A Few Common Questions About Landing Page A/B Testing

Still have questions? Let's tackle some of the most common ones so you can move forward with clarity.

How Long Should I Actually Run an A/B Test?

This is the classic "it depends" question, but here are solid guardrails. Forget about a fixed number of days; what you need is enough reliable data to make a smart decision.

Your test is ready to be called when you’ve hit two critical milestones:

- Statistical Significance: You need to reach a confidence level of at least 95%. Anything less, and you're just guessing.

- A Full Business Cycle: Let it run for at least one full week, but two is even better. This smooths out fluctuations you see between weekdays and weekends.

Seriously, one of the biggest mistakes is calling a test too early. Patience is the name of the game.

What if My Test Results Are Inconclusive?

It's easy to feel like you've failed when a test ends in a statistical tie. But an inconclusive result isn't a failure—it’s a valuable insight. It tells you that the element you tested, like a button color or tiny headline tweak, wasn't important enough to change user behavior.

An inconclusive test is a clear signal to think bigger. It frees you from obsessing over minor changes and pushes you to form a bolder hypothesis for your next experiment.

Don't get discouraged. Take it as a learning moment and swing for the fences on your next test.

Do I Have Enough Traffic for A/B Testing?

You don't need a massive audience, but you do need enough volume to get a clear winner in a reasonable time. If your landing page only gets a couple of conversions a week, you could be waiting months for a result.

As a general rule of thumb, aim for a page that gets at least 1,000 unique visitors and 100 conversions per variation within about a month.

If your numbers are lower, don't sweat it—just shift your strategy. Instead of testing tiny tweaks, go for dramatic, high-impact changes. A small audience is much more likely to react to a complete overhaul than a slightly different shade of blue.
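
To get a rough feel for how much traffic a test needs, you can estimate the sample size up front. The Python sketch below uses a standard formula for comparing two conversion rates. The 80% power setting, the example rates, and the daily traffic figure are assumptions, not recommendations from any specific tool.

```python
import math
from statistics import NormalDist

def sample_size_per_variation(baseline_rate: float, target_rate: float,
                              confidence: float = 0.95, power: float = 0.80) -> int:
    """Approximate visitors needed per variation to detect the given lift."""
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = baseline_rate * (1 - baseline_rate) + target_rate * (1 - target_rate)
    effect = target_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

def days_needed(n_per_variation: int, daily_visitors: int, variations: int = 2) -> int:
    """Days of traffic required to fill every variation."""
    return math.ceil(n_per_variation * variations / daily_visitors)

# Hypothetical page: 4% baseline, hoping to detect a move to 5%, 500 visitors a day.
n = sample_size_per_variation(0.04, 0.05)
print(n, "visitors per variation,", days_needed(n, daily_visitors=500), "days")
```

Try plugging in a bigger expected jump, such as 4% to 8%, and the required sample drops sharply. That's the math behind going for dramatic changes when traffic is low.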

Can I Test More Than One Thing at a Time?

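You can, but the result gets harder to read. When you bundle several changes into one variation, like a new headline, a different hero image, and a revised CTA, you're still running an A/B test, just a muddied one. Testing several elements properly calls for a multivariate test, which serves every combination of those changes and needs far more traffic to reach a verdict.

The problem with bundling is that you lose clarity. If the new version wins, you'll be left wondering why. Was it the headline? The image? The button text? You'll never know which element moved the needle.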

For clean, actionable insights, stick to testing one variable at a time.
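
To see why multivariate testing is so traffic-hungry, count the page versions it creates. A multivariate test typically serves every combination of the elements being varied. This short Python sketch uses made-up headline, image, and CTA options to show how fast that adds up.

```python
from itertools import product

# Hypothetical options: two versions of each element.
headlines = ["Struggling to Generate Leads?", "The Easiest Way to Generate 50% More Leads"]
hero_images = ["product-screenshot", "customer-photo"]
cta_texts = ["Get My Free Ebook", "Start My 14-Day Trial"]

# Every combination becomes its own page version that needs its own traffic.
combinations = list(product(headlines, hero_images, cta_texts))
print(len(combinations))  # 2 * 2 * 2 = 8
```

Eight versions means your traffic is split eight ways instead of two, so each version takes far longer to gather enough data.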

Ready to stop guessing and start building landing pages that convert? With LanderMagic, you can create dynamic, personalized post-click experiences that are optimized from the start. Discover how LanderMagic can supercharge your Google Ads performance today.